This Kubernetes Architecture series covers the main components used in Kubernetes and provides an introduction to Kubernetes architecture. After reading these blogs, you’ll have a much deeper understanding of the main reasons for choosing Kubernetes as well as the main components that are involved when you start running applications on Kubernetes.

This blog series covers the following topics:

Part 1: Reasons to Choose Kubernetes

Introduction to Kubernetes

Kubernetes is a portable, extensible, open source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. The name Kubernetes originates from Greek, meaning helmsman or pilot. It is often shortened to “K8s,” an abbreviation that results from counting the eight letters between the "K" and the "s".

Reasons to run on Kubernetes

There are various reasons to run your applications on Kubernetes. Some of the most important ones are listed below. Most of them are technology oriented, but they must also support your business goals.

Applications with multiple microservices that need to work together - If you run a microservices architecture, you have two options: develop the whole infrastructure to run the services yourself or go with a platform that is built to run and manage microservices, like Kubernetes.

Fault tolerance - Kubernetes provides built-in fault tolerance. Mechanisms such as replicated pods, health checks (liveness and readiness probes) and the replacement of pods lost when a node fails let your application recover from errors gracefully, without degrading the user experience.

Scalability - Kubernetes lets you scale applications horizontally by changing the number of pod replicas to match application requirements, which is particularly helpful when those requirements change over time. You can change the replica count manually from the command line (for example with `kubectl scale`) or let the Horizontal Pod Autoscaler adjust it automatically based on observed metrics such as CPU utilization.

Self-healing - Kubernetes continuously compares the actual state of the cluster with the desired state you have declared. If a container or pod fails, Kubernetes restarts or replaces it to restore the desired state. This behavior is built in and enabled by default.

Cloud-provider-agnostic operation - Having an abstraction layer (Kubernetes) in between your applications and the underlying cloud platform provides the freedom to run your application on multiple clouds or easily migrate between clouds. This flexibility is extremely helpful when requirements, costs or governance issues change.

Separation of concerns - Kubernetes separates the maintenance of the K8s cluster and infrastructure from the management and deployment of applications running on it. This separation of concerns means that application teams can manage their applications and the platform teams can manage the Kubernetes infrastructure supporting them, which reduces cognitive load, streamlines the software development lifecycle and helps all teams move faster.
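The Horizontal Pod Autoscaler mentioned under Scalability derives a replica count from the ratio between an observed metric and its target. The Kubernetes documentation gives the core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), and skips scaling when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below reproduces only that rule plus min/max clamping; `desired_replicas` is a hypothetical helper, not the controller's actual code, which also handles missing metrics, pod readiness and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric).

    Hypothetical helper for illustration only.
    """
    ratio = current_metric / target_metric
    # Leave the replica count alone when the ratio is close enough to 1.0.
    if abs(ratio - 1.0) <= tolerance:
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)
    # Keep the result within the configured replica bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6
    print(desired_replicas(4, 90, 60))  # -> 6
    # 63% against 60% is a ratio of 1.05, inside the tolerance band
    print(desired_replicas(4, 63, 60))  # -> 4
```

Scaling down follows the same formula: 4 pods at 30% against a 60% target yield 2 replicas.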

Kubernetes architecture

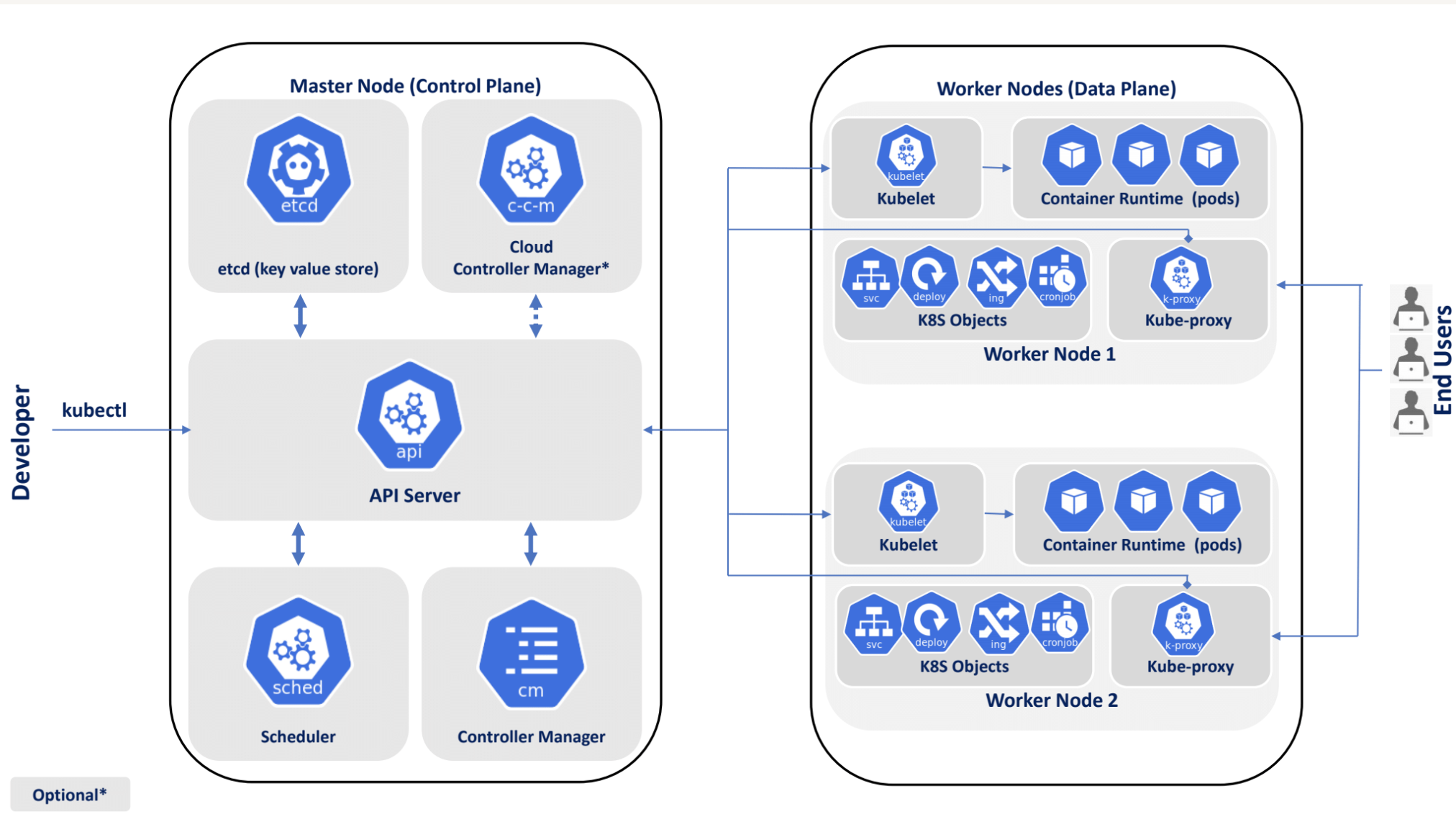

When you deploy Kubernetes, you get a cluster, which is the highest level of abstraction. A cluster consists of a control plane and one or more worker nodes (machines or hosts) that make up the data plane. Both control plane and worker nodes can be physical machines, virtual machines (VMs) running on premises or VMs in the cloud.

In Kubernetes, a pod is the smallest deployable unit that you can create and manage. A pod represents a single instance of a running process in a Kubernetes cluster and can contain one or more containers that share the same network namespace and can share storage volumes. The primary purpose of a pod is to provide a way to run and manage containers within a Kubernetes cluster.
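To make this hierarchy concrete, the following Python sketch models a cluster as control plane hosts plus worker nodes, each node running pods, and each pod grouping containers that share one IP address and may mount shared volumes. The class names, fields and image names are hypothetical and chosen for illustration; they are not the Kubernetes API objects.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    """Smallest deployable unit: one or more containers scheduled together."""
    name: str
    containers: list[Container]
    # Containers in a pod share one network namespace, hence one IP address,
    # and can reach each other over localhost.
    ip: str = ""
    # Volumes that several containers in the pod can mount.
    volumes: list[str] = field(default_factory=list)


@dataclass
class Node:
    """A worker machine (physical or virtual) in the data plane."""
    name: str
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    control_plane: list[str]  # hosts running control plane components
    nodes: list[Node]         # worker nodes that run the workloads

    def all_pods(self) -> list[Pod]:
        return [pod for node in self.nodes for pod in node.pods]


if __name__ == "__main__":
    # Hypothetical pod: an app container plus a log-shipping sidecar
    web = Pod(
        name="web",
        containers=[
            Container("app", "example/app:1.0"),
            Container("log-shipper", "example/shipper:1.0"),
        ],
        ip="10.244.1.5",
        volumes=["shared-logs"],
    )
    cluster = Cluster(
        control_plane=["cp-1"],
        nodes=[Node("worker-1", [web]), Node("worker-2")],
    )
    print(len(cluster.all_pods()))  # -> 1
```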

Understanding how the control and data planes of Kubernetes work is essential for developers, platform engineers and SREs working with Kubernetes. This knowledge helps you design more scalable, efficient and secure applications, and helps you troubleshoot network-related issues.

The next two blogs in this series dive deeper into these two important areas:

Kubernetes Architecture Part 2: Control Plane Components

The control plane is the brain of the Kubernetes system and is responsible for managing and controlling the entire cluster. It includes components such as the API server, etcd, the scheduler and the controller manager. Understanding their roles and interactions is essential for developing and deploying applications on Kubernetes.
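Controllers in the controller manager follow a reconciliation pattern: they compare the desired state (stored in etcd and exposed through the API server) with the observed state and act to close the gap. The same mechanism drives the self-healing described in Part 1. The Python sketch below shows a single reconciliation pass for a replica count; `reconcile`, `new_pod_name` and the in-memory list of pod names are hypothetical simplifications, since a real controller reacts to watch events from the API server and creates or deletes pods through it.

```python
import uuid


def new_pod_name(prefix: str) -> str:
    # Loosely mimics the random suffix Kubernetes appends to generated pod names.
    return f"{prefix}-{uuid.uuid4().hex[:5]}"


def reconcile(desired: int, running: list[str], prefix: str = "web") -> list[str]:
    """One pass of a simplified replica controller (hypothetical).

    Returns a new list of pod names whose length matches `desired`.
    """
    observed = list(running)  # do not mutate the caller's list
    # Too few pods (a crash or a failed node): create replacements.
    while len(observed) < desired:
        observed.append(new_pod_name(prefix))
    # Too many pods (for example after scaling down): delete the surplus.
    while len(observed) > desired:
        observed.pop()
    return observed


if __name__ == "__main__":
    pods = reconcile(3, [])    # initial rollout creates 3 pods
    pods.pop(0)                # simulate a pod crashing
    pods = reconcile(3, pods)  # the next pass replaces the lost pod
    print(len(pods))  # -> 3
```

Because each pass only looks at the difference between desired and observed state, running it repeatedly is safe: once the counts match, it changes nothing.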

Kubernetes Architecture Part 3: Data Plane Components

The data plane consists of the worker nodes that run your workloads in pods, which contain containers. It is also where network traffic within the Kubernetes cluster is handled, including the connections between containers and between containers and external resources.
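Part of that traffic handling is done by kube-proxy, which runs on each worker node and forwards connections sent to a Service's virtual IP to one of the pods backing it. The Python sketch below models only that mapping, using round-robin selection for simplicity. The `ServiceProxy` class and the IP addresses are hypothetical; real kube-proxy programs iptables or IPVS rules in the kernel rather than choosing backends in application code, and in iptables mode it picks backends at random rather than in rotation.

```python
class ServiceProxy:
    """Maps a Service's virtual IP to pod endpoints (hypothetical, simplified)."""

    def __init__(self) -> None:
        self._endpoints: dict[str, list[str]] = {}
        self._next: dict[str, int] = {}

    def set_endpoints(self, service_ip: str, pod_ips: list[str]) -> None:
        # Called whenever the set of ready pods behind the Service changes.
        self._endpoints[service_ip] = list(pod_ips)
        self._next[service_ip] = 0

    def route(self, service_ip: str) -> str:
        # Return the next backend pod IP in rotation for a new connection.
        backends = self._endpoints.get(service_ip)
        if not backends:
            raise LookupError(f"no ready endpoints for {service_ip}")
        i = self._next[service_ip]
        self._next[service_ip] = (i + 1) % len(backends)
        return backends[i]


if __name__ == "__main__":
    proxy = ServiceProxy()
    proxy.set_endpoints("10.96.12.34", ["10.244.1.5", "10.244.2.7"])
    print([proxy.route("10.96.12.34") for _ in range(3)])
    # -> ['10.244.1.5', '10.244.2.7', '10.244.1.5']
```

When a pod is replaced, only the endpoint list changes; clients keep using the same Service IP.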

About StackState

Designed to help engineers of all skill levels who build and support Kubernetes-based applications, StackState provides the most effective solution available for Kubernetes troubleshooting. Our unique approach to SaaS observability helps teams quickly detect and remediate issues so they can ensure optimal system performance and reliability for their customers. With StackState’s comprehensive observability data, the most complete dependency map available, out-of-the-box applied knowledge and step-by-step troubleshooting guidance, any engineer can remediate issues accurately and with less toil.

As a company dedicated to helping teams succeed with Kubernetes, we want to provide useful information in as many related areas as we can. We hope this Kubernetes tutorial has been helpful for your team.
