In addition to the first steps in deploying and configuring Kubernetes that you are already planning, succeeding in using Kubernetes at a larger scale requires you to pay attention to the following five areas:

Building up the right skills in your SRE, DevOps or platform team to ensure application delivery, automation and performance optimization.

CI/CD and platform automation to ensure rapid and repeatable deployment of your platform and applications.

Observability and troubleshooting capabilities to help you stay in control and reduce customer impact in case of an issue.

Security and compliance to ensure a controlled environment and reliable application for your customers.

Kubernetes platform choice, which comes down to whether you run your containers through a cloud provider, in a hybrid environment or on infrastructure you host yourself.

Taking this broader look before you get started will foster new perspectives and well-informed decisions. All of these considerations require thought and planning if you want to make your Kubernetes platform implementation a success. Let's take a closer look at each of these areas:

1. Building up the right skills: SRE vs. DevOps vs. platform team

With every new technology you bring into the company, you need to consider all the different skills that are needed to make it a success. Kubernetes is no exception. Titles are often very fluid and differ from organization to organization, so let’s set them aside. As you start to expand Kubernetes use, there are particular areas that must be addressed.

Regular application development – For many companies, this is happening in a DevOps team where software developers create features and new capabilities. Making use of containers requires these team members to consider and implement different architectures.

Automation, particularly pipeline automation – Pipelines push applications and containers out to the Kubernetes platform. Often these CI/CD pipelines are still developed by the DevOps team, who may or may not have Kubernetes expertise.

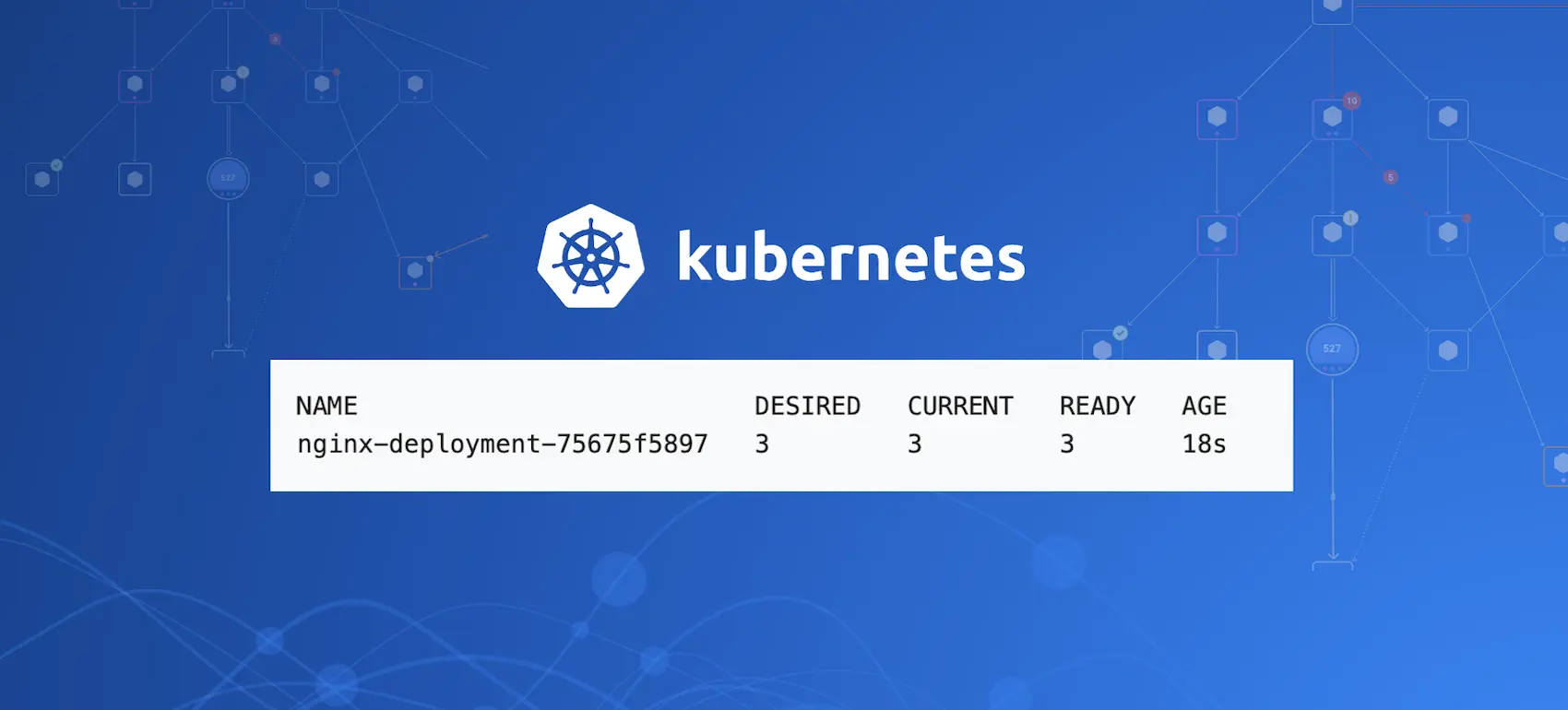

The Kubernetes platform itself – Most of the time, responsibility for Kubernetes does not reside in a single DevOps team since it is a foundation for multiple teams and requires an entirely different skill set to implement and maintain. It also calls for different automation tools and capabilities than software pipelines do. The most common is Helm, a Kubernetes package manager; platform monitoring and other add-on capabilities are often installed as Helm charts.

Application and process optimization – The role of the site reliability engineer is often to optimize the application from a performance perspective, remove toil from the process of using and maintaining it, start measuring service level indicators (SLIs) against service level objectives (SLOs), and set and track error budgets.

Tip: Have the conversation with some of your engineering leads on how to cover all these areas; it is worth the investment. Doing a small gap analysis together will spark the right conversation on where to make investments.

2. CI/CD and platform automation

Automation is crucial, as mentioned above in the skills section. The Kubernetes platform opens up an entirely new landscape of automation possibilities that goes beyond application delivery. Many companies have established strong practices around CI/CD and have matured how teams develop and deliver their software.

With the introduction of the Kubernetes platform, companies need to think through practices like infrastructure as code, (automated) cost management, all new types of security scanning and also cloud storage. Automation is such an extensive area that the Cloud Native Computing Foundation (CNCF) has created an entire landscape with all the areas to consider, so you can easily pick what you need.

Tip: If you’re new to Kubernetes, assess the different areas described in the CNCF Cloud Native Interactive Landscape and have a conversation on what areas matter now and what is the right tool or framework for the job.

3. Observability and troubleshooting capabilities

At StackState we make a clear distinction between troubleshooting and observability. And although they are done by the same people and sometimes even with the same tools, they provide a different perspective.

Troubleshooting is aimed at remediating an issue as fast as possible. This is one of the key activities described in the SRE Handbook. Engineers who are responsible for operations need to have the capability to do this. The main goal is to see what is actually going wrong using the log files, Kubernetes events and/or telemetry so you can fix it quickly. Sometimes troubleshooting needs to be done by engineers who are on call and not so familiar with all the specific technology. It’s important to make sure they have a good solution that guides them through this.
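
As a rough illustration of using Kubernetes events during troubleshooting, the sketch below counts `Warning` events per object and reason, so an on-call engineer sees the noisiest problems first. It assumes the events have already been exported as a list of dictionaries (for example, the `items` array from `kubectl get events -o json`). The function name and the sample data are made up for this example.

```python
from collections import Counter


def summarize_warnings(events: list[dict]) -> list[tuple[str, str, int]]:
    """Group Warning events by (object, reason), most frequent first."""
    counts: Counter = Counter()
    for event in events:
        # Kubernetes events have type "Normal" or "Warning"; skip the normal ones.
        if event.get("type") != "Warning":
            continue
        obj = event.get("involvedObject", {})
        target = f'{obj.get("kind", "?")}/{obj.get("name", "?")}'
        # "count" can be absent or null; treat that as a single occurrence.
        counts[(target, event.get("reason", "?"))] += event.get("count") or 1
    return [(target, reason, n) for (target, reason), n in counts.most_common()]


# Hypothetical sample data, shaped like exported Kubernetes events.
sample_events = [
    {"type": "Normal", "reason": "Scheduled",
     "involvedObject": {"kind": "Pod", "name": "web-1"}},
    {"type": "Warning", "reason": "BackOff", "count": 5,
     "involvedObject": {"kind": "Pod", "name": "web-1"}},
    {"type": "Warning", "reason": "FailedScheduling",
     "involvedObject": {"kind": "Pod", "name": "db-0"}},
    {"type": "Warning", "reason": "BackOff", "count": 2,
     "involvedObject": {"kind": "Pod", "name": "web-1"}},
]

summary = summarize_warnings(sample_events)
for target, reason, n in summary:
    print(f"{n:>3}  {target}  {reason}")
```

A summary like this is only a starting point; the logs and telemetry for the affected object still need to be checked to find the actual cause.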

Observability is the practice of continuously understanding the state of your landscape, both the application and the underlying platform. Gathering the right metrics, traces and logs and setting alerts and dashboards to keep track of your environment helps you to understand how the system behaves. An SRE or DevOps team can set SLIs and SLOs and attach error budgets to SLOs to track how they are progressing on the goals that need to be achieved.
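
To make the relationship between SLIs, SLOs and error budgets concrete, here is a minimal sketch of the arithmetic for a request-based availability SLO. The function name, the fields it returns and the numbers are assumptions chosen for illustration, not output from any particular tool.

```python
def error_budget(slo_target: float, total_requests: int, failed_requests: int) -> dict:
    """Compute the SLI and error-budget consumption for one measurement window.

    slo_target is a fraction, e.g. 0.999 for a 99.9% availability objective.
    """
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be a fraction between 0 and 1")
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")

    # The error budget is the share of requests that is allowed to fail.
    allowed_failures = (1.0 - slo_target) * total_requests
    return {
        "sli": 1.0 - failed_requests / total_requests,  # measured success rate
        "allowed_failures": allowed_failures,
        "budget_consumed": failed_requests / allowed_failures,
        "budget_exhausted": failed_requests > allowed_failures,
    }


# Illustrative numbers only.
report = error_budget(slo_target=0.999, total_requests=1_000_000, failed_requests=250)
print(report)
```

With these example numbers, a 99.9% SLO over one million requests allows roughly 1,000 failures, so 250 failed requests use about a quarter of the budget.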

Tip: Our solution provides strong support for both activities. There are numerous capabilities for guided troubleshooting as well as for setting alerts to observe your entire system. StackState retrieves the right set of data through eBPF and OpenMetrics to provide an understanding of what happened.

4. Security and compliance

This is certainly a broad topic that requires significant attention. Both security and compliance will require time and effort to set up correctly. Here are a few areas to consider:

Application security, in which you set up static code scanning, code reviews and also execute dynamic security testing to deliver a secure application.

Container scanning of the appropriate container images and repositories, to make sure the right containers and content are pushed into production.

Infrastructure as code, to ensure code reviews beyond application code.

A policy engine, such as Open Policy Agent, to give freedom to teams to move quickly in their deployments, but still validate what goes into production and flag issues as needed.
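
To give a feel for what a policy engine validates, the sketch below checks a Deployment manifest against three example rules. This is plain Python for illustration only; it is not Open Policy Agent or its Rego language, and the registry name and the rules themselves are hypothetical.

```python
# Hypothetical allow-list; a real policy would come from your own standards.
APPROVED_REGISTRIES = ("registry.example.com/",)


def check_deployment(manifest: dict) -> list[str]:
    """Return human-readable policy violations for a Deployment manifest."""
    violations = []
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        image = container.get("image", "")

        # Rule 1: images must come from an approved registry.
        if not image.startswith(APPROVED_REGISTRIES):
            violations.append(f"{name}: image '{image}' is not from an approved registry")

        # Rule 2: images must be pinned to a specific tag, not left floating.
        tag = image.rsplit("/", 1)[-1].partition(":")[2]
        if tag in ("", "latest"):
            violations.append(f"{name}: image must be pinned to a specific tag")

        # Rule 3: every container must declare resource limits.
        if not container.get("resources", {}).get("limits"):
            violations.append(f"{name}: resource limits are missing")
    return violations


compliant = {"spec": {"template": {"spec": {"containers": [{
    "name": "web",
    "image": "registry.example.com/shop/web:1.4.2",
    "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
}]}}}}
non_compliant = {"spec": {"template": {"spec": {"containers": [{
    "name": "web",
    "image": "docker.io/library/nginx",
}]}}}}

print(check_deployment(compliant))
print(check_deployment(non_compliant))
```

Running checks like these automatically, at admission time or in the pipeline, is what lets teams deploy on their own while still flagging anything that should not reach production.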

Compliance is all about defining the policies that need to be followed. SOC 2, ISO 27001, PCI DSS and HIPAA are just a few of the standards that might apply to you and require attention when moving to Kubernetes. Besides policy definition, companies need to make sure all change data is collected as changes are being made. The best way is to store the change data in a central location so that when the auditor comes by, a trail of evidence can be easily handed over.
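
One possible shape for such a change trail is sketched below: each change is stored as a record that includes a hash of the previous record, so later tampering can be detected. The field names and the hash-chaining approach are assumptions made for this example, not a requirement of any of the standards above; an in-memory list stands in for the central store.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(record_body: dict) -> str:
    """Hash a record body in a stable (sorted-key) JSON form."""
    payload = json.dumps(record_body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_change(trail: list[dict], actor: str, resource: str, action: str) -> dict:
    """Append a change record that is linked to the previous one by its hash."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "resource": resource,
        "action": action,
        "prev_hash": trail[-1]["hash"] if trail else "",
    }
    record["hash"] = _digest(record)
    trail.append(record)
    return record


def verify(trail: list[dict]) -> bool:
    """Check that no record was altered, removed or reordered."""
    prev_hash = ""
    for record in trail:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev_hash or _digest(body) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True


# Hypothetical usage: two changes made by a pipeline and an engineer.
trail: list[dict] = []
append_change(trail, "ci-pipeline", "deployment/web", "image updated to 1.4.2")
append_change(trail, "alice", "configmap/web-settings", "timeout changed")
print(verify(trail))
```

Whatever the storage format, the point is that evidence is captured automatically at the moment of change rather than reconstructed before an audit.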

Tip: Thinking through the entire chain of evidence creation and collection will take a bit of time up front, but it gives so much freedom to your teams during execution and actual usage.

5. Kubernetes platform choice

While starting to read this blog, you might have expected that the platform itself would be the first topic covered. Although your Kubernetes platform is a very important consideration, the other areas listed above influence the choice you need to make.

For example, if there is very limited knowledge available in your organization, you might want to go for a managed, hosted solution. Or if you expect your deployment to be very exotic and to require a lot from the underlying platform, you might need to keep some additional control over how it is implemented. And in case of particular compliance requirements in a regulated industry, you might pick an on-prem implementation.

For all three situations mentioned above, there are two options to choose from:

- Cloud providers offering managed solutions

- On-premise and/or hybrid solutions

Cloud providers

If you are not used to running a Kubernetes platform, then going for a cloud platform managed by a supplier might be the smartest move. With all large cloud providers investing time and effort to offer Kubernetes, there is plenty of choice. The benefit of going for a managed solution is that many secondary services and integrations are available out of the box, including security, access management, integrations with CI/CD solutions and observability capabilities. The most common cloud providers are:

Google Kubernetes Engine (GKE) – Kubernetes originated at Google and GKE was the first cloud-based managed Kubernetes platform. It is also often considered the most advanced.

Amazon Elastic Kubernetes Service (EKS) – EKS came to the market in June 2018 and since then has delivered an extensive ecosystem around Kubernetes, including CloudWatch for observability.

Azure Kubernetes Service (AKS) – AKS is rapidly being adopted by Azure users as the best way to deploy Kubernetes to Azure.

Each of the options above is a safe choice and can be used at scale. In reality, the choice will most likely be driven by your cloud strategy.

On-premise or hybrid solutions

At its heart, Kubernetes is a container orchestration platform. The suppliers below make operating Kubernetes easier: rolling out new capabilities, scaling services and easy patching are just a few of the core capabilities offered by hybrid solutions. Companies that don’t want to use public cloud have a wide variety of choices to pick from. These enterprise-grade Kubernetes platforms can be used in your own data center, or in a hybrid situation where the platform is still deployed to a cloud provider but under your own control, or, finally, in your own setup using your own operations protocols.

The most common platforms you can manage yourself are:

Red Hat OpenShift is a very extensive platform with many additional capabilities built in that large enterprises might benefit from. It is now part of IBM.

Rancher offers a mature enterprise-grade version to manage your entire Kubernetes platform.

Tanzu, part of VMware, has some strong additional capabilities such as great networking and a private container repository, which might be ideal for an enterprise.

A consideration for choosing one of the options above is that these versions are often a little behind the latest Kubernetes version available. This lag does not reduce reliability or security, but if you want to benefit from the latest and greatest, it is something to consider.

Tip: StackState is agnostic to any of the choices you make, regardless of whether you need a managed version in the cloud or your own deployed platform on-premise. StackState is ideal for observing these platforms and supports the latest versions of Kubernetes.

Conclusion - Kubernetes success

Starting to roll out a Kubernetes platform is probably the simplest activity we’ve discussed here. Looking at the bigger picture, getting the right skills, ensuring repeatable pipelines and secure delivery are areas that demand attention for every organization. Sparking these conversations in your teams and at a management level will certainly improve the quality of implementation.

If you need to know more about these topics, please reach out to one of our experts.